Testing is a key part of every successful email marketing strategy, but if you don’t set your success criteria before each test, the test is worthless.

Let’s take a recent test we ran for a client. It wasn’t a perfect test, as we were testing two things at once, but it produced a set of results worth considering.

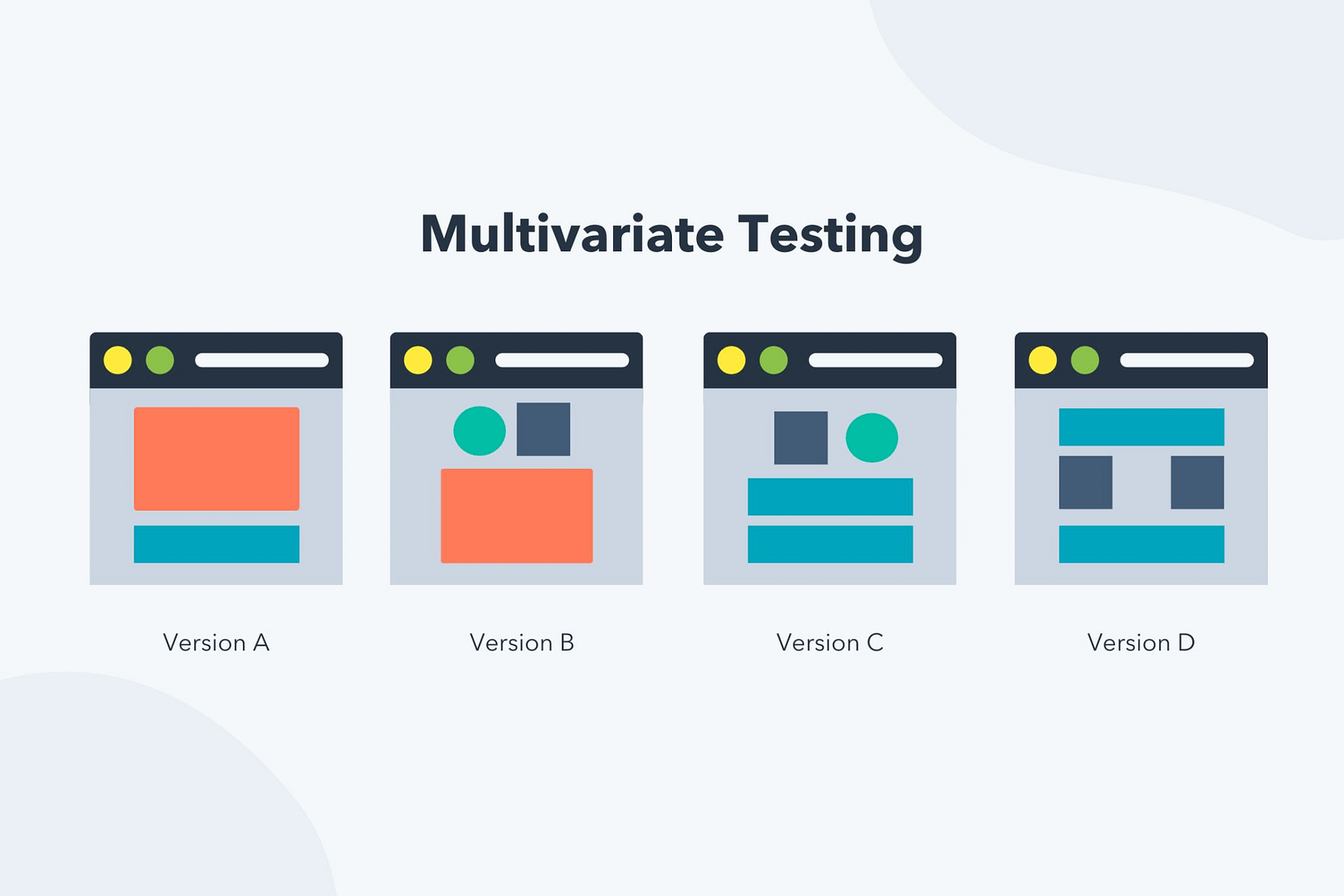

The two tests we ran side by side were:

- Creative

- Subject line

This gave us four schedules (A, B, C and D), all sent at the same time:

- Schedule A – Subject line 1 (SL1), Creative 1 (C1)

- Schedule B – Subject line 1 (SL1), Creative 2 (C2)

- Schedule C – Subject line 2 (SL2), Creative 1 (C1)

- Schedule D – Subject line 2 (SL2), Creative 2 (C2)

Now, here is where it gets interesting:

- Comparing SL1 with SL2, SL1 was the winner by a narrow margin

- On creative, C1 beat C2 by an equally small margin
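Because each subject line was paired with both creatives, each factor can be compared by pooling the schedules that share a level: SL1 is A + B, SL2 is C + D, C1 is A + C and C2 is B + D. The sketch below shows that pooling in Python, assuming you have per-schedule counts of unique opens and unique clicks; the `Counts` fields and the `pool` helper are hypothetical names for illustration, not part of any ESP's API.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Raw counts for one schedule (field names are illustrative assumptions)."""
    unique_opens: int
    unique_clicks: int


# Subject line and creative used by each schedule, following the test design above.
DESIGN = {
    "A": ("SL1", "C1"),
    "B": ("SL1", "C2"),
    "C": ("SL2", "C1"),
    "D": ("SL2", "C2"),
}


def pool(results: dict[str, Counts], level: str) -> Counts:
    """Sum the counts of every schedule that used the given subject line or creative."""
    opens = clicks = 0
    for schedule, levels in DESIGN.items():
        if level in levels:
            opens += results[schedule].unique_opens
            clicks += results[schedule].unique_clicks
    return Counts(opens, clicks)


def unique_click_to_open(c: Counts) -> float:
    """Unique clicks divided by unique opens (0.0 if there were no opens)."""
    return c.unique_clicks / c.unique_opens if c.unique_opens else 0.0
```

Summing raw counts before dividing, rather than averaging the per-schedule rates, keeps schedules with more opens from being under-weighted.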

However, if you had let your ESP’s automated MVT (multivariate testing) tool pick the winner, you would have lost out on orders and revenue:

- When we looked at the winning subject line variants, Click to Order conversion, ABV and revenue per email sent were all lower

- The two variants with the lower Unique Click to Open rate had a higher Click to Order conversion rate
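The trap is that an automated tool declares a winner on one metric, while the business cares about another further down the funnel. The Python sketch below computes the metrics named above for each variant and picks a winner by whichever metric you choose. The `Variant` fields, the `pick_winner` helper and the numbers in the usage note are hypothetical illustrations, not the client’s data and not any platform’s API.

```python
from dataclasses import dataclass


def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


@dataclass
class Variant:
    """Funnel counts for one variant (field names are illustrative assumptions)."""
    name: str
    sent: int
    unique_opens: int
    unique_clicks: int
    orders: int
    revenue: float

    @property
    def click_to_open(self) -> float:
        return _ratio(self.unique_clicks, self.unique_opens)

    @property
    def click_to_order(self) -> float:
        return _ratio(self.orders, self.unique_clicks)

    @property
    def abv(self) -> float:
        # Revenue divided by number of orders.
        return _ratio(self.revenue, self.orders)

    @property
    def revenue_per_email(self) -> float:
        return _ratio(self.revenue, self.sent)


def pick_winner(variants: list[Variant], metric: str) -> str:
    """Return the name of the variant with the highest value of the chosen metric."""
    return max(variants, key=lambda v: getattr(v, metric)).name
```

With made-up figures such as `Variant("X", 10000, 2000, 300, 15, 900.0)` and `Variant("Y", 10000, 2000, 260, 20, 1300.0)`, X wins on `click_to_open` but Y wins on `click_to_order`, `abv` and `revenue_per_email`. That is the same pattern as the test above, which is why the success metric needs to be chosen before the test is sent.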

The results fell just short of statistical significance, but they are a warning that relying on the automated MVT tools built into ESPs, marketing automation and email marketing platforms (such as Klaviyo) can cost you revenue.
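One common way to check whether a gap in a rate such as Click to Order conversion is statistically significant is a two-proportion z-test. The sketch below uses only the Python standard library and assumes independent recipients and samples large enough for the normal approximation to hold. It is an illustration of that standard test, not necessarily the method used in the analysis above, and the function name is hypothetical.

```python
import math


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). The standard error uses the pooled proportion,
    i.e. it assumes the null hypothesis that both rates are equal.
    """
    if trials_a <= 0 or trials_b <= 0:
        raise ValueError("both groups need at least one trial")
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        # Both groups are all successes or all failures: no measurable difference.
        return 0.0, 1.0
    z = (rate_a - rate_b) / se
    # Two-sided p-value for a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(orders_a, clicks_a, orders_b, clicks_b)` compares Click to Order conversion between two variants; a p-value below the threshold you set in advance (0.05 is a common choice) is the usual bar for calling a winner.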